Notes from a fascinating and wide-ranging talk (2011, copied here from an old blog) at the Pervasive Media Studio by Prof. Chris Melhuish of the Bristol Robotics Laboratory… The focus was the challenge of making robots that can operate socially, i.e. in everyday settings alongside humans, such as the home or healthcare. I was struck by the resonances between the practical engineering and interface issues of robot design and (human) phenomenology.

‘We need new bodies’

‘We need new ways of controlling these bodies’

Shared intentionality needs to be developed: a background sense of the other as a candidate for co-operative agency, a joint attentional frame. Social intelligence must exploit multimodal communication. Gesture is important for attracting and directing attention and for emphasis (Gricean communication). This should not be based on speech or conversation alone (as in the classic AI tradition) but requires physical interaction. The robot BERT 2 has ‘gaze detection’, with the aim of understanding what a human is looking at. The robot needs to read (and communicate?) desire, and to link prosody with speech. Gesture is required to develop a co-operatively generated conceptual space.

The robot has a sense of self in space, a body map, an understanding of where it fits into the model. Proprioception.

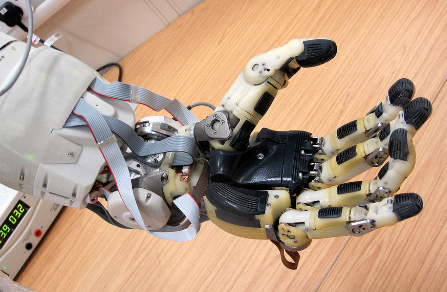

Touch, too: Whiskerbot, Shrewbot. Fingertip / tactile sensing, via the displacement of papillae. The challenge now is to make sense of these stimuli and build a model of the object touched.

New bodies are needed to embody this intelligence.

The sheer mass of robots is the main factor: how to control these forces safely in a social context. Work is under way on electrically activated polymers that function like muscles, with the aim of developing more fluid robots.

Current designs for robot hands are well-actuated but under-sensed.